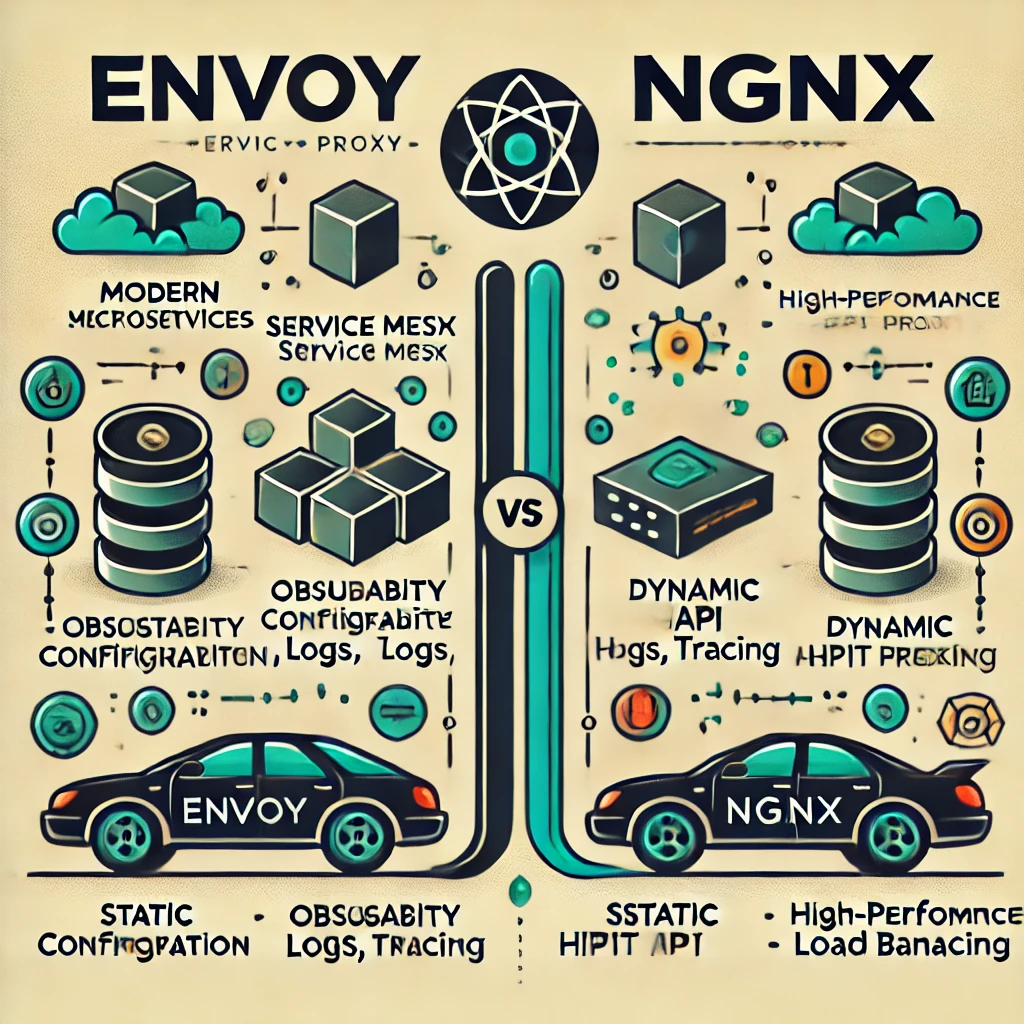

Envoy Proxy vs NGINX: Which One Fits Your Architecture?

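In modern cloud-native applications and microservice architectures, choosing the right proxy is critical for performance, scalability, and security. Envoy Proxy and NGINX are two of the most popular options today. Both are powerful, but they target different scenarios and follow different design philosophies. This article looks at their core differences, strengths, and best-fit use cases.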

Overview

NGINX

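NGINX started as a high-performance web server and later grew into a capable reverse proxy and load balancer. Thanks to its efficient HTTP and TCP handling, it is widely used in both traditional and modern web applications.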

Envoy Proxy

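Envoy is a modern, high-performance proxy originally built at Lyft and designed for cloud-native architectures. It serves as the data-plane proxy in service meshes such as Istio and Consul, and offers strong observability, dynamic configuration, and deep integration with microservice environments.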

Architecture and Design Philosophy

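| Feature | Envoy Proxy | NGINX |
|---|---|---|
| Design philosophy | Built for cloud-native microservice architectures | Originally designed as a web server, later extended into a proxy |
| Configuration management | Dynamic service discovery and configuration through the xDS APIs | Relies on static configuration files; changes require a reload |
| Performance | Optimized for distributed architectures | High performance for traditional web traffic |
| Observability | Built-in metrics, logging, and distributed tracing | Basic logging and monitoring |
| Extensibility | gRPC APIs, filters, and dynamic routing | Lua scripting; limited dynamic capabilities |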

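Configuration and Management

NGINX Configuration

NGINX relies mainly on a static configuration file (nginx.conf); changes take effect only after the configuration is reloaded. This is rarely a problem for traditional applications, but it can become a challenge in dynamic microservice environments where backends change frequently.

Example NGINX configuration: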

    server {
        listen 80;

        location / {
            # "backend" must be defined as an upstream block or resolve as a hostname
            proxy_pass http://backend;
        }
    }

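Envoy Configuration

Envoy favors API-driven configuration: through the xDS APIs (a family of discovery services such as LDS, RDS, CDS, and EDS), listeners, routes, clusters, and endpoints can be updated at runtime without restarting the proxy.

Example Envoy configuration (defined statically here for brevity; the same resources can also be delivered over xDS):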

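    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address:
            address: 0.0.0.0
            port_value: 10000
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              route_config:
                name: local_route
                virtual_hosts:
                - name: backend
                  domains: ["*"]
                  routes:
                  - match:
                      prefix: "/"
                    route:
                      cluster: service_backend
              # The router filter is required as the terminal HTTP filter
              http_filters:
              - name: envoy.filters.http.router
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
      # The route above needs a matching cluster; host and port are placeholders
      clusters:
      - name: service_backend
        type: STRICT_DNS
        load_assignment:
          cluster_name: service_backend
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: backend
                    port_value: 8080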

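Key differences:

- Envoy supports live, dynamic updates, whereas NGINX requires editing the configuration file and reloading.
- Envoy is designed for service mesh architectures, which makes it a better fit for microservice environments.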
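To make the dynamic side more concrete, here is a minimal sketch of an Envoy bootstrap that pulls listeners and clusters from an xDS management server over ADS. The node identifiers, the `xds_cluster` name, the `xds-server` hostname, and port 18000 are all assumptions for illustration; the management server itself is not shown.

```yaml
# Sketch of a bootstrap that fetches listeners and clusters over xDS (ADS).
# Assumed names: node id/cluster, "xds_cluster", host "xds-server", port 18000.
node:
  id: envoy-node-1
  cluster: demo-cluster

dynamic_resources:
  ads_config:
    api_type: GRPC
    transport_api_version: V3
    grpc_services:
    - envoy_grpc:
        cluster_name: xds_cluster
  lds_config:            # listeners arrive over the ADS stream
    resource_api_version: V3
    ads: {}
  cds_config:            # clusters arrive over the ADS stream
    resource_api_version: V3
    ads: {}

static_resources:
  clusters:
  # The management server is the only statically defined cluster.
  - name: xds_cluster
    type: STRICT_DNS
    typed_extension_protocol_options:
      envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
        "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
        explicit_http_config:
          http2_protocol_options: {}   # gRPC requires HTTP/2
    load_assignment:
      cluster_name: xds_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: xds-server
                port_value: 18000
```

With a setup along these lines, routing and backend changes are pushed by the control plane, and the proxy picks them up without a restart or reload.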

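Performance and Scalability

- NGINX is known for its high throughput and event-driven architecture, which suits static content and traditional web applications.
- Envoy is optimized for service-to-service communication, supports gRPC and HTTP/2, and provides built-in observability and fault-tolerance mechanisms.
- Latency: NGINX tends to perform slightly better when serving static content, while Envoy has the edge in dynamic routing and service discovery.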
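The fault-tolerance mechanisms mentioned above are configured declaratively in Envoy. The fragments below are a sketch of what circuit breaking, outlier detection, and retries might look like for a `service_backend` cluster; every threshold and timeout is an illustrative assumption, not a recommendation, and the cluster's endpoints are omitted.

```yaml
# Fragment 1: goes under static_resources (or is delivered via CDS).
clusters:
- name: service_backend
  type: STRICT_DNS
  circuit_breakers:
    thresholds:
    - priority: DEFAULT
      max_connections: 1024        # cap on upstream connections
      max_pending_requests: 256    # cap on queued requests
      max_requests: 1024           # cap on concurrent requests
      max_retries: 3               # cap on concurrent retries
  outlier_detection:
    consecutive_5xx: 5             # eject a host after 5 consecutive 5xx responses
    interval: 10s                  # how often hosts are evaluated
    base_ejection_time: 30s
    max_ejection_percent: 50       # never eject more than half the hosts

# Fragment 2: goes under a virtual host in the route configuration.
routes:
- match:
    prefix: "/"
  route:
    cluster: service_backend
    retry_policy:
      retry_on: "5xx,connect-failure"
      num_retries: 2
      per_try_timeout: 1s
```

Keeping these policies in the proxy rather than in each service means every caller gets the same behavior without carrying its own retry or circuit-breaker library.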

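Observability and Monitoring

Observability is an important consideration when choosing a proxy:

- NGINX provides basic logging and monitoring, but deeper observability requires integrating third-party tools.
- Envoy has built-in support for:
  - Metrics (Prometheus, StatsD)
  - Distributed tracing (Zipkin, Jaeger, OpenTelemetry)
  - Structured logging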

Example Envoy tracing configuration:

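    # Goes under the HttpConnectionManager typed_config in Envoy's v3 API
    tracing:
      provider:
        name: envoy.tracers.zipkin
        typed_config:
          "@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig
          collector_cluster: zipkin   # a cluster named "zipkin" must be defined
          collector_endpoint: "/api/v2/spans"
          collector_endpoint_version: HTTP_JSON   # matches the v2 spans endpoint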
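Metrics and structured logging can be sketched in the same way. The fragments below assume the admin interface is bound to 127.0.0.1:9901 (an arbitrary choice) and that JSON access logs are written to stdout; the JSON key names are hypothetical and can be anything you prefer.

```yaml
# Fragment 1: top level of the bootstrap. With the admin interface enabled,
# Prometheus-format metrics are served at /stats/prometheus.
admin:
  address:
    socket_address:
      address: 127.0.0.1
      port_value: 9901

# Fragment 2: goes under the HttpConnectionManager typed_config.
access_log:
- name: envoy.access_loggers.stdout
  typed_config:
    "@type": type.googleapis.com/envoy.extensions.access_loggers.stream.v3.StdoutAccessLog
    log_format:
      json_format:
        start_time: "%START_TIME%"
        method: "%REQ(:METHOD)%"
        path: "%REQ(:PATH)%"
        response_code: "%RESPONSE_CODE%"
        duration_ms: "%DURATION%"
        upstream_host: "%UPSTREAM_HOST%"
```

Each request then produces a single JSON line, which log pipelines can usually ingest without custom parsing rules.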

Conclusion: If you need deep observability, Envoy is the better choice.

Security Features

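| Feature | Envoy Proxy | NGINX |
|---|---|---|
| mTLS (mutual TLS) | Native support | Requires additional configuration |
| RBAC (role-based access control) | Supported | No built-in support |
| JWT validation | Built-in support | Requires NGINX Plus or a third-party module |
| WAF (web application firewall) | None (requires external integration) | Available with NGINX Plus |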

Conclusion: Envoy ships with stronger built-in security features, while NGINX Plus offers an enterprise-grade WAF (paid).
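As a rough illustration of the Envoy column, the fragments below sketch how mTLS and JWT validation might be enabled on a listener. The certificate paths, the issuer URL, the `my-api` audience, the `example_provider` name, and the `jwks_cluster` name are all assumptions; a cluster pointing at the JWKS host would also need to be defined.

```yaml
# Fragment 1: goes under a filter chain; requires clients to present a
# certificate signed by the trusted CA (mTLS).
transport_socket:
  name: envoy.transport_sockets.tls
  typed_config:
    "@type": type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.DownstreamTlsContext
    require_client_certificate: true
    common_tls_context:
      tls_certificates:
      - certificate_chain:
          filename: /etc/envoy/certs/server.crt
        private_key:
          filename: /etc/envoy/certs/server.key
      validation_context:
        trusted_ca:
          filename: /etc/envoy/certs/ca.crt

# Fragment 2: goes under the HttpConnectionManager typed_config; the JWT
# filter must come before the router filter.
http_filters:
- name: envoy.filters.http.jwt_authn
  typed_config:
    "@type": type.googleapis.com/envoy.extensions.filters.http.jwt_authn.v3.JwtAuthentication
    providers:
      example_provider:
        issuer: https://issuer.example.com
        audiences:
        - my-api
        remote_jwks:
          http_uri:
            uri: https://issuer.example.com/.well-known/jwks.json
            cluster: jwks_cluster
            timeout: 5s
          cache_duration: 300s
    rules:
    - match:
        prefix: "/"
      requires:
        provider_name: example_provider
- name: envoy.filters.http.router
  typed_config:
    "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
```

In a service mesh, the control plane typically generates this kind of configuration and rotates the certificates, so it is rarely written by hand.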

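Use Cases

When to Choose NGINX

✅ You need a high-performance web server to handle HTTP/TCP traffic.

✅ Your architecture is monolithic or uses a traditional load-balancing model.

✅ You want lightweight, static configuration with minimal dependencies.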

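When to Choose Envoy Proxy

✅ You run a microservice or service mesh architecture.

✅ You need dynamic service discovery along with advanced monitoring and tracing.

✅ Your applications rely on gRPC, HTTP/2, or an API gateway pattern.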

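Conclusion

Envoy Proxy and NGINX each have their strengths and suit different architectures and requirements.

- NGINX is an ideal choice for traditional web applications, load balancing, and reverse proxying.
- Envoy Proxy excels in cloud-native, microservice, and service mesh environments.

The final choice depends on your application's needs. If you are running a highly scalable cloud-native architecture, Envoy is the better choice. For traditional web workloads, NGINX remains the dominant option.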

What's Your Choice?

Are you using Envoy or NGINX in your architecture? Share your experience in the comments!