Demystifying Apache Kafka

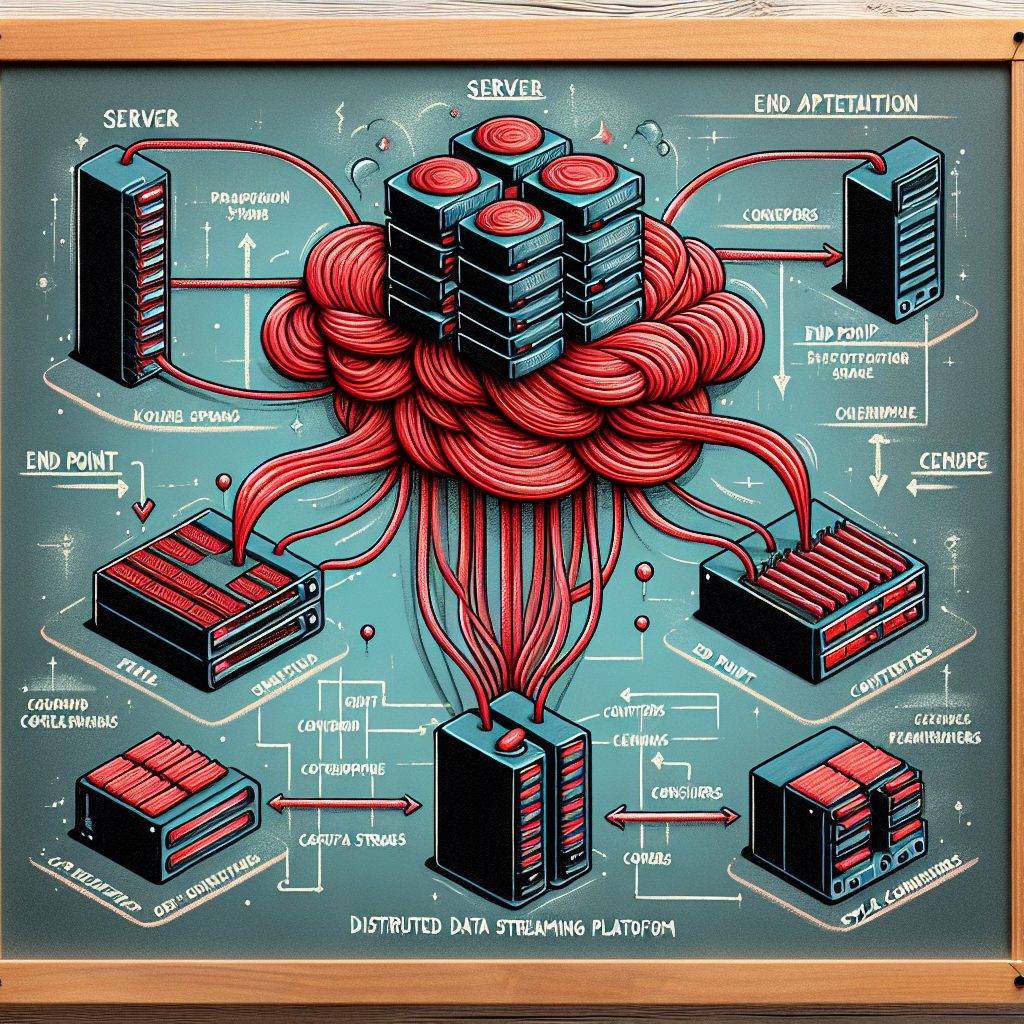

In the world of data processing and real-time event streaming, Apache Kafka has emerged as a popular distributed event-streaming platform for handling high-throughput, low-latency data streams. In this blog post, we will take a close look at the core pieces of the Kafka ecosystem: Kafka itself, ZooKeeper, brokers, topics, Kafkacat, producers, and consumers. Understanding these fundamental elements is essential for building scalable and robust event-driven applications.

1. Apache Kafka: The Heart of the Event-Streaming Ecosystem

Apache Kafka is an open-source, distributed streaming platform that provides a unified, fault-tolerant architecture for handling real-time data streams. It is designed to handle large volumes of data efficiently and reliably, making it a popular choice for building event-driven applications and real-time analytics pipelines.

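2. ZooKeeper: The Distributed Coordination Service

ZooKeeper has long been an integral part of the Kafka ecosystem. It serves as a distributed coordination service responsible for maintaining the Kafka cluster’s configuration, metadata, and state: which brokers are alive, which topics and partitions exist, and which broker is acting as the controller. Very old consumer clients also stored their offsets in ZooKeeper, but since Kafka 0.9 consumers commit offsets to an internal Kafka topic (`__consumer_offsets`) instead. Newer Kafka releases can run without ZooKeeper by using KRaft mode, in which a quorum of controllers manages the metadata inside Kafka itself, and Kafka 4.0 drops ZooKeeper support altogether.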

3. Brokers: The Backbone of Kafka Cluster

Kafka brokers are the individual nodes in the Kafka cluster that handle the storage, transmission, and replication of data. They act as intermediaries between producers and consumers, facilitating the reliable and scalable distribution of data across multiple topics and partitions.
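To picture how replication spreads data across brokers, here is a minimal Python sketch. It is a deliberate simplification rather than Kafka's real placement algorithm: the function name `place_replicas` and the broker IDs are made up for illustration, and each partition's replicas are simply placed on consecutive brokers, with the first one in the list treated as the leader.

```python
def place_replicas(num_partitions, broker_ids, replication_factor):
    """Toy replica placement: partition p goes to consecutive brokers.

    Simplified for illustration; Kafka's actual assignment logic differs.
    """
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    layout = {}
    for p in range(num_partitions):
        layout[p] = [
            broker_ids[(p + i) % len(broker_ids)]
            for i in range(replication_factor)
        ]
    return layout


# Three partitions, three hypothetical brokers, two copies of each partition.
layout = place_replicas(3, [101, 102, 103], 2)
for partition, replicas in layout.items():
    print(f"partition {partition}: leader={replicas[0]} followers={replicas[1:]}")
```

With two copies of every partition on different brokers, losing any single broker still leaves one copy of each partition available.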

4. Topics: The Channels for Data Stream

Topics are fundamental abstractions in Kafka. Each topic is a named data stream or feed that producers publish messages to and consumers read messages from. A topic is split into one or more partitions, and each message is assigned a sequential offset that is unique within its partition (not across the whole topic), enabling consumers to keep track of their progress in each partition.
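To make the offset idea concrete, here is a minimal Python sketch that models a topic as a set of append-only partition logs. It is not Kafka's actual implementation, and the class names `Topic` and `Partition` are made up for illustration. The point it shows is that offsets are assigned per partition, so counting starts at 0 in each one.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    """An append-only log; a record's offset is its position in this log."""
    records: list = field(default_factory=list)

    def append(self, value: str) -> int:
        self.records.append(value)
        return len(self.records) - 1  # offset of the record just written


@dataclass
class Topic:
    """A named stream made up of a fixed number of partitions."""
    name: str
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self):
        self.partitions = [Partition() for _ in range(self.num_partitions)]


orders = Topic("orders", num_partitions=2)
first = orders.partitions[0].append("order-1")
second = orders.partitions[0].append("order-2")
other = orders.partitions[1].append("order-3")
print(first, second, other)  # 0 1 0
```

A consumer that remembers "partition 0, offset 1" knows exactly where to resume reading, which is all that tracking progress in the stream requires.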

5. Kafkacat: A Swiss Army Knife for Kafka

Kafkacat (now named kcat) is a powerful command-line utility that serves as a “netcat” for Apache Kafka. It allows developers to interact with Kafka topics directly from the terminal, making it a handy tool for debugging, testing, and inspecting Kafka clusters. Kafkacat can act as a producer that reads messages from standard input, as a consumer that writes messages to standard output, or as a metadata lister, and piping a consumer into a producer lets it relay messages from one topic to another.

6. Producers: Data Publishers to Kafka Topics

Producers are the client applications that write data to Kafka topics. They create messages and send them to a specific topic, optionally attaching a key that determines which partition receives the message. Because they supply the continuous flow of data into the Kafka ecosystem, producers are critical components for building event-driven applications.
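When a producer sends a message with a key, the client hashes the key to pick a partition, so every message with the same key lands in the same partition and stays in order. The Python sketch below illustrates that idea under stated assumptions: `choose_partition` is a hypothetical helper, and CRC32 stands in for the murmur2 hash that the Java client's default partitioner uses, so the partition numbers it produces will not match a real cluster.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition index.

    CRC32 is a stand-in hash for illustration only.
    """
    return zlib.crc32(key) % num_partitions


events = [(b"user-1", "login"), (b"user-2", "login"), (b"user-1", "logout")]

# Pair each event with the partition its key maps to (3 partitions assumed).
placed = [(choose_partition(key, 3), key, value) for key, value in events]
for partition, key, value in placed:
    print(partition, key.decode(), value)
```

Both `user-1` events get the same partition number, which is what preserves per-key ordering.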

7. Consumers: Data Subscribers from Kafka Topics

Consumers, on the other hand, are the recipients of the data within Kafka topics. They read messages from topics and process them as needed. Kafka supports consumer groups: the partitions of a topic are divided among the consumers in a group so that each partition is read by only one group member at a time, which lets multiple consumers work in parallel to process large volumes of data effectively.
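The way a consumer group shares work can be sketched in a few lines of Python. This is a simplified round-robin assignment written for illustration, not the logic of Kafka's built-in assignors, and `assign_round_robin` and the consumer names are hypothetical.

```python
def assign_round_robin(partitions, consumers):
    """Deal partitions out to the group's consumers one at a time.

    Simplified for illustration; each partition gets exactly one owner.
    """
    assignment = {consumer: [] for consumer in consumers}
    ordered = sorted(consumers)
    for i, partition in enumerate(sorted(partitions)):
        assignment[ordered[i % len(ordered)]].append(partition)
    return assignment


# Four partitions shared by two consumers: each one reads two partitions.
two = assign_round_robin(range(4), ["consumer-a", "consumer-b"])
print(two)  # {'consumer-a': [0, 2], 'consumer-b': [1, 3]}

# Two partitions but three consumers: one consumer has nothing to read.
three = assign_round_robin(range(2), ["consumer-a", "consumer-b", "consumer-c"])
print(three)  # {'consumer-a': [0], 'consumer-b': [1], 'consumer-c': []}
```

The second case shows why adding consumers beyond the number of partitions does not increase parallelism: the extra group members simply sit idle.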

Conclusion

Apache Kafka has revolutionized the way modern applications handle data streaming and real-time event processing. Understanding the core components of Kafka, including Zookeeper, Brokers, Topics, Kafkacat, Producers, and Consumers, is essential for building robust and scalable event-driven systems.

Kafka’s distributed architecture, fault tolerance, and high throughput have made it a go-to choice for building real-time data pipelines, communication between microservices, and streaming analytics applications.

As the world of data continues to grow and evolve, Apache Kafka will remain a fundamental tool for developers and data engineers looking to leverage the power of real-time data streams. So, dive into the Kafka ecosystem, experiment with Kafkacat, and unleash the full potential of event-driven architectures. Happy Kafka-ing!